Edge Computing Drivers: Overcoming Centralized Cloud Challenges in the 5G Era

Why Edge Computing Matters: The Issues with Centralized Cloud for Next-Gen Apps

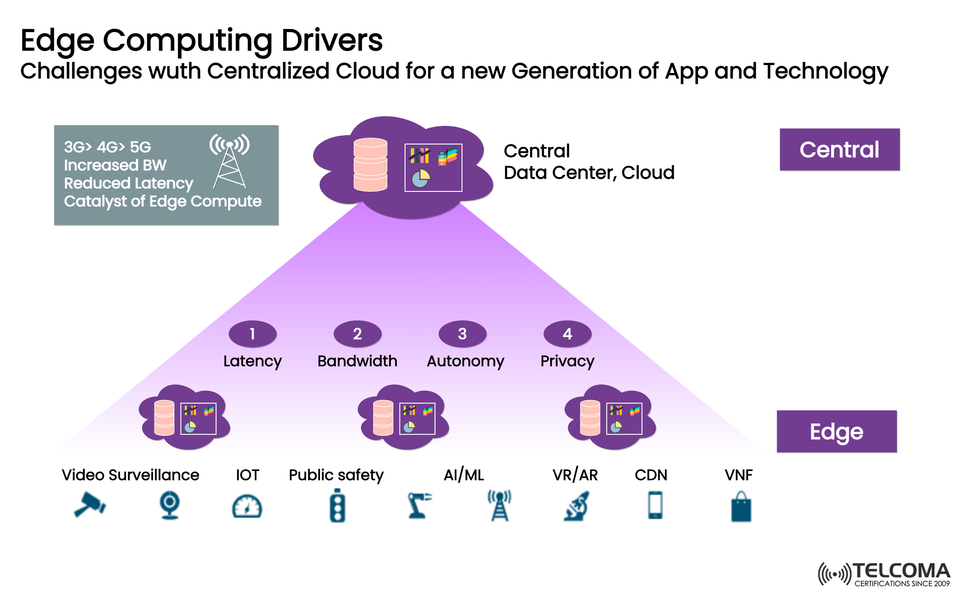

As we move from 3G and 4G to 5G, the surge of data-heavy applications—from IoT and AI/ML to VR/AR and video analytics—is changing what we need from networks and computing.

Although traditional centralized cloud setups have fueled the digital world for some time, they’re struggling to meet the demands of applications that need ultra-low latency, high bandwidth, and real-time decision-making.

That's where Edge Computing steps in. It decentralizes data processing, bringing computation closer to users or devices.

The image above sums up the main challenges with centralized cloud models and highlights the four key drivers pushing us toward edge computing: latency, bandwidth, autonomy, and privacy.

What Is Edge Computing?

Edge computing is a distributed IT framework that shifts computation and data storage closer to where the data originates—think IoT devices, sensors, or local gateways—rather than just relying on distant cloud servers.

In simpler terms, instead of sending all data back to a far-off data center for processing, edge computing lets data be processed locally, right where it’s created.

This change helps cut down on network congestion, reduces latency, and lessens our reliance on centralized systems—making for nearly real-time responsiveness that’s crucial for 5G applications.

Challenges with Traditional Cloud Models

Before we get into the drivers for edge computing, it’s essential to recognize the limitations of the conventional cloud models shown in the image.

Latency

Data has to travel long distances to reach centralized data centers, and even a tiny delay can mess up time-sensitive operations like:

Self-driving cars needing to make split-second decisions

Industrial robots coordinating on the factory floor

Remote surgeries and real-time health monitoring

Latency is a major reason folks are turning to edge computing.

Bandwidth

The massive data generated by billions of connected IoT devices can choke network capacity. Sending raw sensor data or high-def video to the cloud nonstop can lead to:

Skyrocketing backhaul costs

Network congestion

Inefficient use of spectrum resources

With edge computing, we process data on-site, sending only relevant insights to the cloud, which saves bandwidth.

Autonomy

Centralized clouds rely heavily on stable, fast internet connections. In critical situations (like public safety or remote mining), you can’t afford any downtime.

Edge computing enables local decision-making and autonomous operation, even if the connection to the main network is temporarily lost.

Privacy and Security

Sending sensitive information—like health records or surveillance footage—to the central cloud puts it at risk for breaches or compliance issues.

Edge computing steps up data privacy by:

Keeping sensitive information local

Using encryption and anonymization at the source

Reducing exposure to risks associated with centralized systems

Breaking Down the Four Drivers of Edge Computing

The image highlights four main Edge Computing Drivers that are reshaping modern network designs.

Reducing Latency

Edge computing cuts down latency by processing data close to where it’s created—whether that’s at a cell tower, base station, or even on a user’s smartphone.

A few examples include:

Video surveillance systems analyzing footage in real time for any anomalies.

Autonomous vehicles making prompt navigation choices based on sensor data.

Gaming and AR/VR applications offering lag-free, immersive experiences.

By avoiding round trips to a central cloud, latency can drop from the 50–200 ms typical of a far-off data center to under 10 ms in optimized 5G environments.
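To get a feel for why distance matters, here is a rough sketch that estimates only the propagation part of the round trip over fiber. It assumes light travels at about 200,000 km/s in fiber (roughly two-thirds of its speed in a vacuum) and ignores queuing, routing, and processing delays, so real-world figures will be higher. The distances and the function name are illustrative, not measurements.

```python
# Rough propagation-delay estimate; ignores queuing, routing, and processing.
FIBER_SPEED_KM_PER_S = 200_000  # assumption: ~2/3 the speed of light in a vacuum


def round_trip_ms(distance_km: float) -> float:
    """Return the fiber propagation round-trip time in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical distances: a nearby edge site vs. more distant data centers.
    for label, km in [("edge site", 10), ("regional cloud", 500), ("remote cloud", 5000)]:
        print(f"{label:>15}: {round_trip_ms(km):.2f} ms")
```

Even before any processing happens, distance alone sets a floor on latency that no amount of server capacity can remove, which is why moving compute closer is the lever that matters here.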

Optimizing Bandwidth

Instead of sending huge volumes of raw data to centralized servers, edge computing processes and filters the data on-site.

Only the crucial or aggregated info is sent to the cloud.

This is particularly important for:

IoT networks, which have thousands of sensors constantly generating telemetry data.

CDNs (Content Delivery Networks) that store and deliver content close to users.

The outcome is that:

Bandwidth usage drops significantly.

Core network congestion is lessened.

Service costs go down while performance rises.
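The "process and filter on-site" idea can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the function name, the summary fields, and the threshold values are all assumptions made for the example. It collapses a window of raw sensor readings into one summary record and keeps only out-of-range values as individual data points.

```python
from statistics import mean


def summarize_window(readings: list[float], low: float, high: float) -> dict:
    """Collapse a window of raw readings into one summary record.

    Only this summary (including any out-of-range values) would be sent upstream.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Keep individual values only when they fall outside the normal range.
        "anomalies": [r for r in readings if r < low or r > high],
    }


if __name__ == "__main__":
    # Hypothetical temperature samples from one sensor over one window.
    window = [21.0, 21.5, 22.0, 35.0, 21.5]
    print(summarize_window(window, low=15.0, high=30.0))
```

The summary record stays about the same size whether the window holds five readings or five thousand, and that is where the bandwidth saving comes from.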

Autonomy and Local Decision-Making

In environments where real-time control and resilience are crucial, autonomy becomes vital.

In edge computing setups:

Systems can still operate even when the connection to the cloud is interrupted.

Local nodes can make real-time decisions using cached data or AI models.

A few examples include:

Public safety systems that can process alerts even if the network is down.

Manufacturing robots that keep working during network interruptions.

AI/ML analyses carried out locally on edge servers without depending on the cloud.

This ability is essential for mission-critical and remote applications, ensuring reliability and continuous operation.
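One common way to get this behavior is a store-and-forward pattern: decide locally, buffer the result, and sync when the link returns. The sketch below is a simplified illustration under stated assumptions. The `EdgeNode` class, the threshold rule, and the `send_to_cloud` callback are hypothetical names, and a real system would also need persistence and retry timing.

```python
from collections import deque
from typing import Callable


class EdgeNode:
    """Hypothetical edge node that decides locally and syncs when it can."""

    def __init__(self, send_to_cloud: Callable[[dict], bool], threshold: float,
                 max_buffered: int = 1000):
        self.send_to_cloud = send_to_cloud  # returns True on successful upload
        self.threshold = threshold
        # Bounded buffer: the oldest events are dropped if an outage runs long.
        self.pending: deque = deque(maxlen=max_buffered)

    def handle(self, reading: float) -> str:
        # The decision is made locally and never waits on the network.
        action = "shutdown" if reading > self.threshold else "continue"
        self.pending.append({"reading": reading, "action": action})
        self.flush()
        return action

    def flush(self) -> None:
        # Upload buffered events in order; stop at the first failure.
        while self.pending:
            if not self.send_to_cloud(self.pending[0]):
                break
            self.pending.popleft()


if __name__ == "__main__":
    link = {"online": False}  # simulated connectivity state

    def fake_uplink(event: dict) -> bool:
        return link["online"]

    node = EdgeNode(fake_uplink, threshold=80.0)
    print(node.handle(95.0), len(node.pending))  # decision made while offline
    link["online"] = True
    node.flush()
    print(len(node.pending))  # buffer drains once the link is back
```

The key design choice is that `handle` returns its decision regardless of whether the upload succeeds, so a network outage delays reporting but not control.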

Privacy and Security

Edge computing lowers risk by keeping sensitive data local and minimizing cloud exposure.

Data like video feeds, health metrics, and user identifiers can be:

Processed and anonymized at the edge

Stored securely with local encryption

Shared selectively with central servers

Examples of this include:

Healthcare systems that keep patient information confidential

Urban video analytics that process footage on-site instead of streaming raw video to the cloud

Enterprise IoT that protects proprietary data from leaks
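As a small illustration of "process at the edge, share selectively," the sketch below replaces a direct identifier with a keyed hash and forwards only an allow-listed set of fields. The record schema, field names, and key handling are assumptions for the example. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the token from a known ID.

```python
import hashlib
import hmac

# Fields allowed to leave the edge in this sketch (hypothetical schema).
SHAREABLE_FIELDS = {"heart_rate", "timestamp"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy that is safer to forward: keyed-hash the ID, drop the rest."""
    token = hmac.new(
        secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # Copy only allow-listed fields; everything else stays on the edge node.
    shared = {k: v for k, v in record.items() if k in SHAREABLE_FIELDS}
    shared["patient_token"] = token
    return shared


if __name__ == "__main__":
    raw = {
        "patient_id": "p-001",
        "name": "Jane Doe",
        "heart_rate": 72,
        "timestamp": 1700000000,
    }
    # The key here is a placeholder; a real key would live in local secure storage.
    print(pseudonymize(raw, secret_key=b"example-key-kept-on-the-edge"))
```

Using an allow-list rather than a block-list means a new sensitive field added later stays local by default.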

Centralized vs. Edge Computing: A Quick Comparison

| Aspect | Centralized Cloud | Edge Computing |
| --- | --- | --- |
| Data Processing Location | Far-off data centers | Near the data source |
| Latency | High (50–200 ms) | Very low (<10 ms) |
| Bandwidth Usage | Heavy | Optimized |
| Autonomy | Dependent on connectivity | Operates independently |
| Privacy | Data travels through the network | Data remains local |
| Best Use Cases | Storage, analytics, backup | Real-time IoT, AI, VR, and 5G applications |

This comparison shows why many industries are increasingly leaning towards distributed computing architectures that blend cloud and edge capabilities.

Edge and Cloud: A Collaborative Future

It's also worth mentioning that Edge Computing doesn’t replace the cloud—it complements it.

In today's cloud-edge continuum, both systems work hand in hand:

The Edge takes care of real-time processing, quick decisions, and privacy-sensitive tasks.

The Cloud manages heavy computation, storage, and AI model training.

This hybrid approach supports scalability, reliability, and smart performance across networks.
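The division of labor described here can be expressed as a simple placement rule. This is only a sketch: the `Task` fields and the `place` function are hypothetical, and real schedulers weigh many more factors such as cost, capacity, and data volume.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    max_latency_ms: float    # how quickly a result is needed
    privacy_sensitive: bool  # must the raw data stay on-site?


def place(task: Task, cloud_rtt_ms: float) -> str:
    """Pick "edge" or "cloud" for a task based on latency and privacy needs."""
    # Privacy-sensitive work, or work the cloud cannot answer in time, stays local.
    if task.privacy_sensitive or task.max_latency_ms < cloud_rtt_ms:
        return "edge"
    return "cloud"


if __name__ == "__main__":
    # Hypothetical tasks and a hypothetical 80 ms round trip to the cloud.
    tasks = [
        Task("collision avoidance", max_latency_ms=10, privacy_sensitive=False),
        Task("patient video triage", max_latency_ms=5000, privacy_sensitive=True),
        Task("model training", max_latency_ms=3_600_000, privacy_sensitive=False),
    ]
    for t in tasks:
        print(f"{t.name}: {place(t, cloud_rtt_ms=80)}")
```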

Wrapping Up

The rise of 5G and advanced digital applications has laid bare the limitations of traditional centralized cloud models.

As shown in the image, issues regarding latency, bandwidth, autonomy, and privacy have become significant drivers for edge computing.

By shifting computing closer to users and devices, Edge Computing provides the speed, reliability, and data security necessary for this new wave of AI-powered, real-time, and mission-critical services.