Flexibility in CU-UP Deployment: Optimizing 5G Networks for eMBB and URLLC Services

Flexibility of CU-UP Deployment Supporting Multiple 5G Service Needs

The 5G ecosystem increasingly demands flexible, scalable architectures that accommodate drastically different service requirements, such as those of enhanced Mobile Broadband (eMBB) and Ultra-Reliable Low-Latency Communications (URLLC). One of the strongest enablers of this flexibility is the ability to deploy the CU-UP (Central Unit - User Plane) in multiple ways.

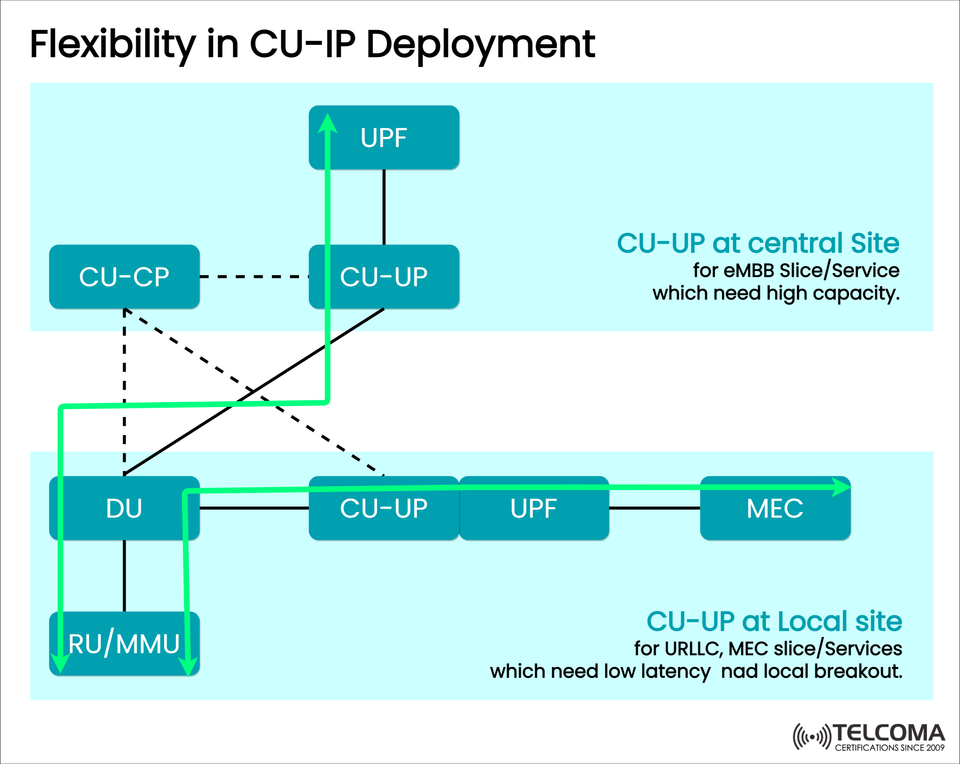

The concept is not new, but the image from Telcoma shows the two main CU-UP deployment options in a 5G architecture:

CU-UP at the central site for high-capacity eMBB services

CU-UP at the local site to support low-latency URLLC and possibly MEC-based applications

Let's unpack this architecture and its advantages.

Understanding CU-CP and CU-UP in 5G Architecture

In a disaggregated 5G architecture, the Central Unit (CU) is separated into:

CU-CP (Control Plane): Takes care of signaling and control functions.

CU-UP (User Plane): Takes care of user data traffic.

Separating these two components lets operators scale the user plane independently and place the CU-UP wherever service requirements demand, either at a central site or at a local (edge) site.
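The split can be pictured as a small data model. The sketch below is illustrative only: the class names, instance names, site labels, and the `cu_up_for` helper are all hypothetical, and it assumes a single CU-CP managing several CU-UP instances (in 3GPP terms, the two are connected over the E1 interface).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class CuUp:
    """A user-plane instance; `site` is a hypothetical label."""
    name: str
    site: str  # "central" or "edge"


@dataclass
class CuCp:
    """A control-plane instance that manages several CU-UPs."""
    name: str
    cu_ups: List[CuUp] = field(default_factory=list)

    def cu_up_for(self, site: str) -> CuUp:
        """Return the first managed CU-UP hosted at the given site."""
        for cu_up in self.cu_ups:
            if cu_up.site == site:
                return cu_up
        raise LookupError(f"no CU-UP at site {site!r}")


# One control plane, two user planes placed at different sites.
cu_cp = CuCp("cu-cp-1", [CuUp("cu-up-embb", "central"), CuUp("cu-up-urllc", "edge")])
print(cu_cp.cu_up_for("edge").name)
```

The point of the model is that control stays in one place while each user-plane instance can sit at a different site.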

CU-UP at Central Site (For eMBB)

Use Case:

Enhanced Mobile Broadband (eMBB) slices or services that demand high throughput but can tolerate some delay.

Architecture Characteristics:

The CU-CP and CU-UP are housed in a centralized data center.

The CU-UP connects to a central UPF (User Plane Function).

Best for capacity-oriented applications such as video streaming, cloud gaming, and AR/VR.

Advantages:

Centralized compute/storage can be leveraged efficiently.

High-volume data can be more efficiently managed.

Ideal for dense urban environments with massive demand.

🏠 CU-UP at the Local Site (For URLLC & MEC)

Use Case:

URLLC and MEC slices that need ultra-low latency and local breakout.

Architecture Characteristics:

The CU-UP is hosted at or near the local cell site.

Connects to the local UPF and edge MEC servers.

Provides a shorter data path with localized service processing.

Advantages:

Ultra-low latency for mission-critical services (e.g. self-driving cars, factory automation).

Local content caching and edge computing.

Improved reliability and responsiveness.

🔁 Central versus Local CU-UP Implementations

| Feature | Central CU-UP (eMBB) | Local CU-UP (URLLC/MEC) |
| --- | --- | --- |
| Location | Centralized data center | Edge or local site |
| Target Services | High-capacity eMBB | Low-latency URLLC, MEC |
| Latency Requirements | Moderate | Ultra-low |
| Related Functions | Central UPF | Local UPF + MEC |
| Example Applications | Video streaming, cloud gaming | V2X, smart factories |
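The comparison above amounts to a lookup from slice type to CU-UP site. A minimal sketch, assuming a static mapping is enough; the `SliceType` enum, the `PLACEMENT` table, and the `place_cu_up` function are hypothetical names introduced for illustration, not part of any 3GPP or vendor API.

```python
from enum import Enum


class SliceType(Enum):
    EMBB = "eMBB"
    URLLC = "URLLC"
    MEC = "MEC"


# Slice type -> (CU-UP site, paired functions), following the comparison above.
PLACEMENT = {
    SliceType.EMBB: ("central", "central UPF"),
    SliceType.URLLC: ("local", "local UPF + MEC"),
    SliceType.MEC: ("local", "local UPF + MEC"),
}


def place_cu_up(slice_type: SliceType) -> str:
    """Describe where the CU-UP for this slice type would be placed."""
    site, functions = PLACEMENT[slice_type]
    return f"{slice_type.value}: CU-UP at {site} site with {functions}"


for slice_type in SliceType:
    print(place_cu_up(slice_type))
```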

🌐 Integration with MEC and UPF

A flexible CU-UP deployment works together with:

MEC: Multi-access Edge Computing, which brings applications closer to users.

UPF: User Plane Function, which routes user data (packets) to the internet or to local servers.

When a UPF and CU-UP are deployed locally, the architecture supports local breakout: traffic reaches local MEC services without first traversing the central core site.
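To make local breakout concrete, the sketch below lists the hops user traffic would take in each case. It is a simplification under stated assumptions: the hop names, the `"local-mec"` and `"internet"` destination labels, and the `user_plane_path` function are hypothetical, and real paths depend on the operator's transport design.

```python
from typing import List


def user_plane_path(destination: str, local_upf_deployed: bool) -> List[str]:
    """Return the hops user traffic takes from the UE to its destination.

    `destination` is either "local-mec" or "internet" (hypothetical labels).
    """
    if destination == "local-mec" and local_upf_deployed:
        # Local breakout: traffic exits the mobile network at the edge site.
        return ["UE", "DU", "local CU-UP", "local UPF", "MEC app"]
    if destination == "local-mec":
        # No local UPF: traffic detours through the central site first.
        return ["UE", "DU", "central CU-UP", "central UPF", "MEC app"]
    # Internet-bound traffic uses the central user plane in this sketch.
    return ["UE", "DU", "central CU-UP", "central UPF", "internet"]


print(" -> ".join(user_plane_path("local-mec", local_upf_deployed=True)))
print(" -> ".join(user_plane_path("local-mec", local_upf_deployed=False)))
```

Both paths have the same number of hops here; the difference is that the second one crosses the backhaul to the central site before the traffic can reach the MEC application.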

🔍 The Importance of Flexible CU-UP Deployment

Improved Network Agility: Services can be deployed where they are most needed.

Better Resource Utilization: Compute and transport resources can be matched to service demands.

Network Slicing Support: CU-UP placement can follow the requirements of each slice (for example, eMBB, URLLC, and IoT).

Multi-Vendor Support: Flexible deployments fit disaggregated, open 5G architectures.

🔧 Planning for Flexible Deployment of CU-UP

To bring flexible CU-UP deployment into real-world telecom networks, several technical and operational concerns must be resolved.

⚙️ Deployment Options and Considerations

| Deployment Consideration | Centralized CU-UP Site | Localized CU-UP Site |
| --- | --- | --- |
| Latency Sensitivity | Latency-tolerant use cases (eMBB, streaming) | Delay-sensitive use cases (URLLC, MEC, V2X) |
| Backhaul Requirements | Requires high-bandwidth backhaul to the central location | Lower backhaul bandwidth needed thanks to local breakout |
| Availability of Compute Resources | Requires centralized cloud infrastructure | Requires local edge compute and storage |
| Service Continuity | Easier to manage across a broad coverage area | Redundancy and failover must be managed at the edge |
| Mobility Management | Simplified, centralized handover management | Added complexity when UEs move between edge domains |
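The latency trade-off can be estimated with rough arithmetic. The sketch below assumes about 5 µs of one-way propagation delay per kilometer of optical fiber (light travels at roughly 200,000 km/s in glass) and ignores processing and queuing delay. The two distances and the function name are hypothetical values chosen for illustration.

```python
FIBER_DELAY_US_PER_KM = 5.0  # approximate one-way propagation delay in optical fiber


def transport_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over a fiber link."""
    one_way_us = distance_km * FIBER_DELAY_US_PER_KM
    return 2 * one_way_us / 1000.0


central_km = 200.0  # hypothetical distance from cell site to central data center
local_km = 5.0      # hypothetical distance from cell site to edge site

print(f"central: {transport_rtt_ms(central_km):.2f} ms")  # central: 2.00 ms
print(f"local:   {transport_rtt_ms(local_km):.2f} ms")    # local:   0.05 ms
```

Under these assumptions, transport alone adds about 2 ms of round-trip delay for the central site. That is a small cost for streaming, but a large share of a URLLC budget measured in single-digit milliseconds.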

🛠 Use Case Scenarios for CU-UP Placement

eMBB (Enhanced Mobile Broadband)

The centralized CU-UP must carry the large data flows that applications such as YouTube, Netflix, and VR generate. The priority here is capacity rather than latency.

URLLC (Ultra-Reliable Low-Latency Communications)

The localized CU-UP supports extremely low-latency use cases such as robotic surgery or autonomous driving. The priority here is latency and reliability.

MEC (Multi-access Edge Computing)

As with URLLC, the CU-UP can be deployed locally to keep data local, reduce load on the core, and ultimately give end users a smoother experience.

Examples include smart retail, industrial automation, and real-time analytics.

🔮 Future Outlook: CU-UP in 5G and Beyond

Flexible CU-UP deployment will become even more important as networks move to 5G-Advanced and 6G:

AI/ML-Based Network Slicing: Selecting the CU-UP location automatically, in real time, based on QoS demand.

Zero-Touch Provisioning: Deploying CU-UP functions automatically through orchestration frameworks (e.g., ETSI MANO or ONAP).

Open RAN Integration: CU-UP elements from different vendors interoperating with open, multi-vendor DU and RU layers.

Private 5G Networks: CU-UP deployed locally for enterprises that need ultra-low latency (e.g., ports, factories).
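Automated placement, as in the first item above, could in its simplest form reduce to a feasibility filter followed by a cost comparison. This is a sketch under stated assumptions, not how any orchestrator actually works: a real system would rely on learned models and an orchestration framework. The `Site` fields, the sample numbers, and `choose_site` are all hypothetical, and no ETSI MANO or ONAP API is used.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Site:
    name: str
    rtt_ms: float     # measured round-trip delay to the cell site (hypothetical)
    free_gbps: float  # spare user-plane capacity (hypothetical)
    cost: float       # relative cost of hosting a CU-UP here (hypothetical)


def choose_site(sites: List[Site], max_rtt_ms: float, need_gbps: float) -> Optional[Site]:
    """Pick the cheapest site that meets both the latency and capacity demand."""
    feasible = [s for s in sites if s.rtt_ms <= max_rtt_ms and s.free_gbps >= need_gbps]
    return min(feasible, key=lambda s: s.cost) if feasible else None


sites = [Site("central-dc", 8.0, 400.0, 1.0), Site("edge-a", 0.5, 20.0, 3.0)]

urllc_choice = choose_site(sites, max_rtt_ms=1.0, need_gbps=2.0)
embb_choice = choose_site(sites, max_rtt_ms=20.0, need_gbps=100.0)
print(urllc_choice.name)  # edge-a
print(embb_choice.name)   # central-dc
```

A tight latency bound forces the more expensive edge site, while a high-throughput demand with a loose bound falls back to the cheaper central data center.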

🧠 Conclusion: CU-UP Flexibility Enables Service Differentiation

Flexible CU-UP deployment is not simply a design choice but a fundamental consideration in 5G. By choosing where the CU-UP runs, operators can:

Maximize user experience based on slice type.

Proactively manage cost and latency trade-offs.

Effectively deploy MEC and local breakout.

Prepare networks for scalable, automated service delivery.