Understanding the 3GPP Client Reference Architecture for VR DASH Streaming Applications

Exploring the 3GPP VR DASH Client Architecture: Shaping the Future of Immersive Streaming

Virtual Reality (VR) streaming is among the most bandwidth- and latency-sensitive applications that mobile networks must support. To enable smooth, high-quality VR experiences, the 3rd Generation Partnership Project (3GPP) has specified a client reference architecture for VR DASH streaming as part of its VR profiles for streaming applications (3GPP TS 26.118).

The framework describes how VR content is streamed, decoded, and rendered, while the client reacts in real time to user input such as head movement.

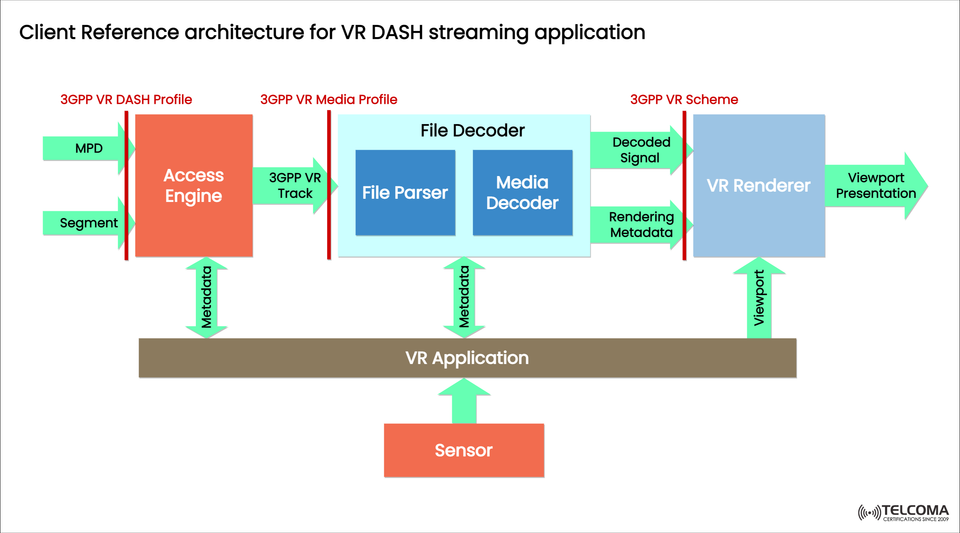

The diagram above shows the data flow through the architecture, from fetching VR DASH content to presenting it to the viewer, with metadata-driven processing and adaptive streaming along the way.

Let’s take a closer look at each part to see how everything works together to create amazing VR experiences over 5G and beyond.

What Is VR DASH Streaming?

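DASH (Dynamic Adaptive Streaming over HTTP) is a widely used standard for streaming multimedia over the internet. It splits content into short segments, each encoded at several quality levels (called representations), so the client can choose, segment by segment, the version that best fits current network conditions.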
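DASH leaves the adaptation logic to the client, so players differ in how they pick a quality level. Below is a minimal sketch of one common heuristic, throughput-based selection. The bitrate ladder, the measured throughput, and the 0.8 safety factor are illustrative assumptions, and `select_bitrate` is a hypothetical helper rather than part of any DASH library.

```python
from typing import Sequence


def select_bitrate(available_bps: Sequence[int], measured_throughput_bps: float,
                   safety_factor: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a safety margin of measured throughput.

    Falls back to the lowest bitrate when no representation fits.
    """
    if not available_bps:
        raise ValueError("no representations available")
    budget = measured_throughput_bps * safety_factor  # leave headroom for throughput dips
    fitting = [b for b in available_bps if b <= budget]
    return max(fitting) if fitting else min(available_bps)


# Hypothetical bitrate ladder (bits per second), as it might be listed in an MPD.
ladder = [1_000_000, 2_500_000, 5_000_000, 10_000_000]
print(select_bitrate(ladder, 7_000_000))  # budget 5.6 Mbit/s -> 5000000
print(select_bitrate(ladder, 900_000))    # nothing fits -> falls back to 1000000
```

The safety factor trades quality for robustness: a lower value wastes some bandwidth but makes it less likely that a segment arrives too late and stalls playback.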

When you apply this to VR (Virtual Reality), you get VR DASH, which adds a few extra layers of complexity:

Support for 360° video

Streaming based on the user's viewpoint

Real-time adjustments according to head movements and sensor data

To manage these complexities, 3GPP defined VR-specific profiles and a reference architecture so that VR devices and networks from different vendors interoperate.

Overview of the 3GPP VR DASH Client Architecture

As shown in the image, the architecture consists of three key functional blocks:

Access Engine – This part retrieves VR media segments.

File Decoder – It handles parsing and decoding the media for playback.

VR Renderer – This module renders the decoded stream into an immersive view.

All three components work in tandem with a VR Application layer and a Sensor subsystem for adaptive and real-time VR rendering.

Component Breakdown

A. Access Engine (3GPP VR DASH Profile)

The Access Engine is the entry point of the VR DASH client. It fetches VR media data from the server according to the 3GPP VR DASH Profile, which extends standard DASH with VR-specific signaling.

Key Functions:

Requests and downloads media segments based on the Media Presentation Description (MPD) file.

Manages adaptive bitrate (ABR) selection — picking the best segment quality given the network conditions.

Extracts metadata from MPD files for VR-specific rendering (like spherical coordinates and tile mapping).

Sends 3GPP VR tracks to the next step (File Decoder).
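Requesting segments starts with reading the MPD, which is an XML document, so that step can be illustrated with Python's standard `xml.etree.ElementTree`. The sketch below lists each Representation's `id` and `bandwidth` from a trimmed, made-up MPD. It assumes the standard DASH namespace `urn:mpeg:dash:schema:mpd:2011` and does not attempt to read the VR-specific descriptors that the 3GPP VR DASH Profile adds; `list_representations` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Namespace used by MPEG-DASH MPD documents.
MPD_NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical, heavily trimmed MPD: one video AdaptationSet with two Representations.
SAMPLE_MPD = """<?xml version="1.0"?>
<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="low" bandwidth="2000000" width="1920" height="960"/>
      <Representation id="high" bandwidth="8000000" width="3840" height="1920"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_xml: str) -> list[tuple[str, int]]:
    """Return (id, bandwidth in bit/s) for every Representation in the MPD."""
    root = ET.fromstring(mpd_xml)
    reps = root.findall(".//mpd:Representation", MPD_NS)
    return [(r.get("id"), int(r.get("bandwidth"))) for r in reps]


print(list_representations(SAMPLE_MPD))
# [('low', 2000000), ('high', 8000000)]
```

The bandwidth values gathered this way are what an adaptive bitrate heuristic would choose among.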

Inputs & Outputs:

| Input | Output |
|---|---|
| MPD (Media Presentation Description) | 3GPP VR Track |
| Media Segments | Metadata to VR Application |

With viewport-dependent streaming, this layer can restrict high-quality downloads to the data the user actually needs, such as the visible part of the 360° scene, which saves bandwidth.
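Viewport-dependent delivery is often implemented by splitting the 360° frame into tiles and fetching the tiles that overlap the viewport at high quality. The sketch below assumes an equirectangular frame cut into a hypothetical 8 × 4 grid, with yaw increasing to the left so that column 0 sits at yaw +180°. It treats the viewport as a simple yaw/pitch rectangle, an approximation that ignores the stretching of equirectangular content near the poles. `visible_tiles` is an illustrative helper, not a 3GPP-defined function.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def visible_tiles(yaw: float, pitch: float, hfov: float, vfov: float,
                  cols: int = 8, rows: int = 4) -> set[tuple[int, int]]:
    """Return (row, col) of equirectangular tiles that overlap the viewport.

    Approximation: the viewport is a yaw/pitch rectangle centred on (yaw, pitch),
    which ignores equirectangular distortion near the poles.
    """
    tile_w, tile_h = 360.0 / cols, 180.0 / rows
    vp_bottom, vp_top = pitch - vfov / 2, pitch + vfov / 2
    tiles = set()
    for r in range(rows):
        tile_top = 90.0 - r * tile_h          # row 0 is the top of the frame
        tile_bottom = tile_top - tile_h
        if tile_top <= vp_bottom or tile_bottom >= vp_top:
            continue                          # no vertical overlap
        for c in range(cols):
            # Column 0 is the left edge, assumed to be yaw +180 (yaw increases leftward).
            tile_center_yaw = 180.0 - (c + 0.5) * tile_w
            # Horizontal overlap test that handles the +/-180 degree seam.
            if abs(wrap_deg(tile_center_yaw - yaw)) < (tile_w + hfov) / 2:
                tiles.add((r, c))
    return tiles


# Looking straight ahead with a 90 x 90 degree field of view.
print(sorted(visible_tiles(yaw=0, pitch=0, hfov=90, vfov=90)))
# [(1, 3), (1, 4), (2, 3), (2, 4)]
```

A client could then request the returned tiles from a high-bitrate Representation and the remaining tiles from a low-bitrate one, so that a sudden head turn still shows something rather than a blank area.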

B. File Decoder (3GPP VR Media Profile)

After the Access Engine collects the VR track, the File Decoder steps in to process and decode it, following the 3GPP VR Media Profile.

This decoder has two main parts:

File Parser

Media Decoder

File Parser

Interprets container formats (like MP4-based VR segments).

Retrieves timing, synchronization, and spatial metadata.

Shares metadata with the VR application layer for rendering.
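MP4-based segments follow the ISO Base Media File Format (ISOBMFF), in which data is organized as boxes. Each box begins with a 32-bit big-endian size and a four-character type; a size of 1 means a 64-bit size follows, and a size of 0 means the box runs to the end of the data. Below is a minimal sketch of a parser walking the top-level boxes, assuming the whole segment is already in memory. It does not interpret box payloads, nested boxes, or VR-specific metadata, and `iter_boxes` is a hypothetical name.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, offset: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (box_type, offset, size) for each ISOBMFF box in data[offset:end]."""
    end = len(data) if end is None else end
    while offset + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, offset)
        header_len = 8
        if size == 1:                      # 64-bit 'largesize' follows the type
            if offset + 16 > end:
                raise ValueError(f"truncated largesize at offset {offset}")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header_len = 16
        elif size == 0:                    # box extends to the end of the data
            size = end - offset
        if size < header_len or offset + size > end:
            raise ValueError(f"malformed box at offset {offset}")
        yield raw_type.decode("latin-1"), offset, size
        offset += size


# Synthetic example: an 'ftyp' box, an empty 'moov' box, and an 'mdat' with size 0.
sample = (struct.pack(">I4s4sI", 16, b"ftyp", b"isom", 0)
          + struct.pack(">I4s", 8, b"moov")
          + struct.pack(">I4s", 0, b"mdat") + b"\x00" * 4)
for box in iter_boxes(sample):
    print(box)
# ('ftyp', 0, 16)
# ('moov', 16, 8)
# ('mdat', 24, 12)
```

A fuller parser would recurse into container boxes such as `moov` by calling the same function with the box's payload range.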

Media Decoder

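Decodes the audio and video bitstreams with the codecs used in the 3GPP VR media profiles, such as H.264/AVC and H.265/HEVC for video; because 360° video is carried as projected 2D frames, standard decoders can handle it.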

Produces decoded signals that are ready to be rendered.

Outputs:

Decoded signal (ready for rendering)

Rendering metadata (for example, projection format and region-wise packing)

Following this, the decoded content moves on to the VR Renderer, which creates those immersive visuals.

C. VR Renderer (3GPP VR Scheme)

The VR Renderer implements the 3GPP VR Scheme, guiding how decoded signals get mapped into the final VR viewport.

Core Functions:

Converts decoded frames into viewport-specific presentations driven by real-time head-tracking data.

Uses rendering metadata to adjust positioning, scaling, and orientation for the 360° content.

Works with the VR Application and Sensor subsystem for synchronized output.

Data Flow:

| Input | Output |
|---|---|
| Decoded Signal | Viewport Presentation |
| Rendering Metadata | Viewport Presentation |
| Viewport Info from VR Application | Adaptive Rendering |

Because the renderer draws only the part of the 360° scene that falls within the current viewport, it avoids wasted processing and helps maintain a smooth frame rate.
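At its core, viewport rendering casts a ray through each output pixel, rotates it by the current head orientation, and looks up the matching sample in the decoded 360° frame. The sketch below does this with nearest-neighbour sampling of an equirectangular frame stored as a list of rows. The axis convention (x forward, y left, z up, yaw increasing to the left) and all function names are assumptions for illustration; a real renderer would do this on the GPU with filtering.

```python
import math


def viewport_ray(px, py, vp_w, vp_h, hfov_deg, head_yaw_deg, head_pitch_deg):
    """World-space direction through the centre of viewport pixel (px, py).

    Assumed axes: x forward, y left, z up. Yaw rotates about z (positive = left),
    pitch tilts the view toward +z (positive = up).
    """
    f = (vp_w / 2) / math.tan(math.radians(hfov_deg) / 2)   # focal length in pixels
    x, y, z = f, vp_w / 2 - (px + 0.5), vp_h / 2 - (py + 0.5)
    th = math.radians(head_pitch_deg)                          # apply head pitch
    x, z = x * math.cos(th) - z * math.sin(th), x * math.sin(th) + z * math.cos(th)
    ph = math.radians(head_yaw_deg)                            # then head yaw
    x, y = x * math.cos(ph) - y * math.sin(ph), x * math.sin(ph) + y * math.cos(ph)
    return x, y, z


def direction_to_yaw_pitch(x, y, z):
    """Yaw and pitch in degrees of a 3D direction (same assumed axes)."""
    return math.degrees(math.atan2(y, x)), math.degrees(math.atan2(z, math.hypot(x, y)))


def equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Nearest equirectangular pixel; yaw +180 maps to the left edge, pitch +90 to the top."""
    u = ((0.5 - yaw_deg / 360.0) % 1.0) * width
    v = (0.5 - pitch_deg / 180.0) * height
    return min(int(u), width - 1), min(max(int(v), 0), height - 1)


def render_viewport(frame, vp_w, vp_h, hfov_deg, head_yaw, head_pitch):
    """Nearest-neighbour resampling of an equirectangular frame into a viewport."""
    src_h, src_w = len(frame), len(frame[0])
    out = []
    for py in range(vp_h):
        row = []
        for px in range(vp_w):
            ray = viewport_ray(px, py, vp_w, vp_h, hfov_deg, head_yaw, head_pitch)
            u, v = equirect_pixel(*direction_to_yaw_pitch(*ray), src_w, src_h)
            row.append(frame[v][u])
        out.append(row)
    return out
```

Only the pixels inside the viewport are computed, which is where the efficiency described above comes from: the cost scales with the display resolution, not with the full 360° frame.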

Supporting Components

A. VR Application Layer

The VR Application serves as the main controller for all VR client functions. It takes in metadata from the Access Engine and File Decoder and uses sensor input (like head movements and positions) to guide the renderer about which viewport to display.

Functions include:

Managing user interactions.

Synchronizing the flow of metadata among modules.

Sending viewport and rendering instructions to the VR Renderer.

Offering feedback for quality optimization in streaming.

This layer keeps media streaming in step with what the user is doing.

B. Sensor Subsystem

The Sensor module picks up real-world motion and orientation data from VR headsets, controllers, or cameras.

Key Functions:

Monitors the user's head movement, gaze direction, and position.

Provides viewport updates to the VR Application.

Supports the dynamic adjustment of streaming focus (viewport-adaptive streaming).

By continuously relaying motion data, it enables real-time adaptive rendering — the heart of compelling VR experiences.
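Head trackers commonly report orientation as a unit quaternion. The sketch below derives the yaw and pitch of the viewing direction by rotating an assumed forward vector with the quaternion, which avoids committing to a particular Euler-angle convention. The (w, x, y, z) component order, the x-forward/y-left/z-up axes, and the helper names are assumptions for illustration.

```python
import math


def axis_angle(axis, deg):
    """Unit quaternion (w, x, y, z) for a rotation of `deg` degrees about a unit axis."""
    h = math.radians(deg) / 2
    return (math.cos(h), axis[0] * math.sin(h), axis[1] * math.sin(h), axis[2] * math.sin(h))


def rotate_vector(q, v):
    """Rotate 3D vector v by unit quaternion q = (w, x, y, z)."""
    w, qx, qy, qz = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2 * (qy * vz - qz * vy)
    ty = 2 * (qz * vx - qx * vz)
    tz = 2 * (qx * vy - qy * vx)
    # v' = v + w * t + q_vec x t
    return (vx + w * tx + (qy * tz - qz * ty),
            vy + w * ty + (qz * tx - qx * tz),
            vz + w * tz + (qx * ty - qy * tx))


def viewing_direction(q):
    """Yaw and pitch (degrees) of the assumed forward axis (+x) after rotation by q."""
    x, y, z = rotate_vector(q, (1.0, 0.0, 0.0))
    return math.degrees(math.atan2(y, x)), math.degrees(math.atan2(z, math.hypot(x, y)))


print(viewing_direction(axis_angle((0, 0, 1), 90)))   # yaw ~ 90, pitch ~ 0 (turned left)
print(viewing_direction(axis_angle((0, 1, 0), -30)))  # yaw ~ 0, pitch ~ 30 (looking up)
```

With y pointing left and z up, looking up corresponds to a negative rotation about +y, which is why the second example uses -30 degrees.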

Data Flow Summary

To give a quick recap of the process depicted in the diagram:

| Step | Process | Standard/Interface |
|---|---|---|
| 1 | Access Engine retrieves MPD and segments | 3GPP VR DASH Profile |
| 2 | Media is parsed and decoded | 3GPP VR Media Profile |
| 3 | Renderer builds the viewport | 3GPP VR Scheme |
| 4 | VR Application integrates metadata and sensor feedback | VR Application Layer |
| 5 | Sensor sends head tracking data | Viewport Metadata |

This process represents the end-to-end flow of VR DASH content from server to display, keeping data streaming, decoding, and user movements synchronized.
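The five steps can be wired together in a simple loop. The sketch below uses hypothetical stub classes named after the blocks in the diagram (none of them is a 3GPP-defined API) so that the order of calls is visible. In a real client the renderer runs once per display refresh while the Access Engine works once per segment, so these stages would run at different rates rather than in lock-step as shown here.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    yaw: float
    pitch: float


class Sensor:
    """Stub sensor that replays recorded head orientations (hypothetical)."""

    def __init__(self, samples):
        self._samples = iter(samples)

    def read(self) -> Viewport:
        return next(self._samples)


class AccessEngine:
    def fetch(self, index: int, viewport: Viewport) -> dict:
        # Stand-in for MPD handling and segment download (3GPP VR DASH Profile);
        # a viewport-dependent client would use `viewport` to choose what to request.
        return {"index": index, "payload": b""}


class FileDecoder:
    def decode(self, track: dict) -> tuple:
        # Stand-in for file parsing and media decoding (3GPP VR Media Profile).
        return f"frame{track['index']}", {"projection": "equirectangular"}


class VRRenderer:
    def render(self, frame: str, metadata: dict, viewport: Viewport) -> str:
        # Stand-in for viewport rendering (3GPP VR Scheme).
        return f"{frame} ({metadata['projection']}) @ yaw={viewport.yaw:g}, pitch={viewport.pitch:g}"


class VRApplication:
    """Coordinates the other blocks, mirroring steps 1-5 of the flow summary."""

    def __init__(self, sensor, engine, decoder, renderer):
        self.sensor, self.engine = sensor, engine
        self.decoder, self.renderer = decoder, renderer

    def run(self, num_segments: int) -> list:
        shown = []
        for i in range(num_segments):
            viewport = self.sensor.read()                 # head-tracking data
            track = self.engine.fetch(i, viewport)        # fetch segment
            frame, metadata = self.decoder.decode(track)  # parse and decode
            shown.append(self.renderer.render(frame, metadata, viewport))  # present viewport
        return shown


app = VRApplication(Sensor([Viewport(0, 0), Viewport(45, -10)]),
                    AccessEngine(), FileDecoder(), VRRenderer())
for line in app.run(2):
    print(line)
```

Keeping each block behind a narrow interface like this mirrors the intent of the reference architecture: a component can be swapped without changing the others.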

Why 3GPP Standardization Matters

The 3GPP standardization of VR DASH architecture is crucial for:

Interoperability across various devices and vendors.

Optimized performance for both standalone and connected 5G VR headsets.

Quality adaptation under different network conditions.

Reduced latency, enhancing the immersive experience.

By setting profiles for DASH streaming, media decoding, and rendering, 3GPP connects traditional media delivery methods with immersive XR applications.

Benefits of VR DASH Client Architecture

| Feature | Benefit |
|---|---|
| Adaptive Streaming | Adjusts video quality on the fly to keep playback smooth. |
| Viewport Rendering | Directs processing only toward visible content. |
| Metadata Integration | Enables context-aware content decoding. |
| Sensor Feedback Loop | Allows real-time adaptation to user movements. |
| Standardized Profiles | Ensures compatibility and consistent performance. |

These advantages make the architecture well-suited for 5G VR streaming, cloud gaming, and XR entertainment.

Application in 5G Networks

5G’s low latency and high bandwidth make it well suited to VR DASH streaming. Relevant capabilities include:

Multi-access Edge Computing (MEC), which offloads heavy processing from the device and, by running it close to the user, helps lower motion-to-photon latency.

Network slicing, which can reserve dedicated capacity for VR traffic.

Together with 3GPP’s standardized VR architecture, these technologies allow for truly real-time VR experiences.

Conclusion

The 3GPP Client Reference Architecture for VR DASH Streaming serves as a framework for delivering next-generation immersive experiences. By combining adaptive streaming, metadata-driven decoding, and real-time rendering, it ensures users enjoy smooth, high-quality VR content, even when network conditions fluctuate.

As advancements in 5G, edge computing, and AI-driven streaming optimization continue, architectures like this will be pivotal in the future of Extended Reality (XR) ecosystems, paving the way for realistic VR gaming, remote collaboration, and engaging entertainment.