Flexible CU/DU Deployment Strategies in 5G RAN: Enabling Latency-Specific Network Slicing

How CU/DU Deployment Enhances Latency-Aware 5G Network Slicing

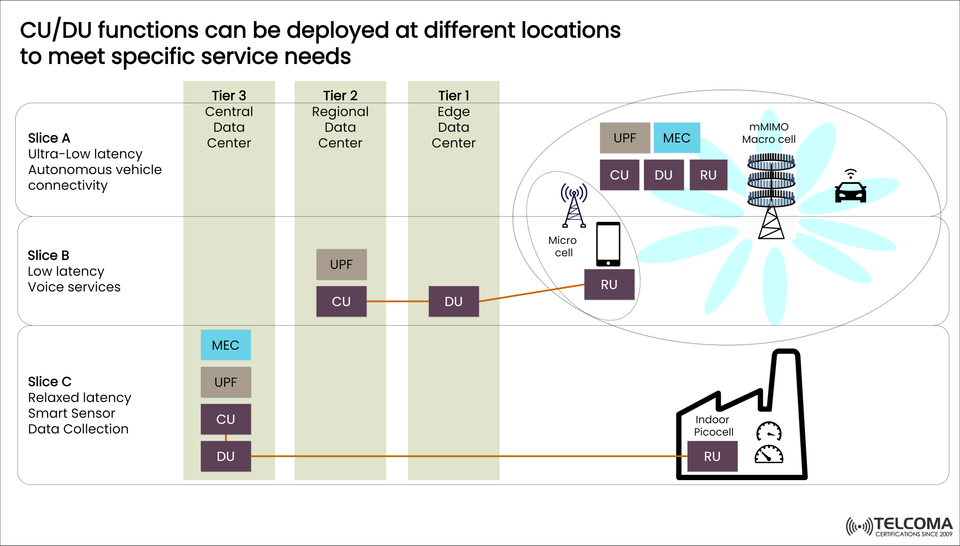

5G obliges operators to deliver an immense array of services, from ultra-low-latency communication with autonomous vehicles to latency-tolerant IoT sensor collection. To address these distinct needs, Centralized Units (CUs) and Distributed Units (DUs) can be paired and deployed at multiple network tiers (Edge, Regional, and Central Data Centres), with the edge enabling low-latency service delivery. This structure provides flexibility and supports network slicing, because operators can place CUs and DUs in the tier that matches each slice's Service Level Agreement (SLA).

The image above illustrates CU/DU functions spread across several network tiers, enabling three slices: ultra-low latency (Slice A), low latency (Slice B), and relaxed latency (Slice C).

🧩 5G RAN functional split = CU, DU and RU

CU (Centralized Unit): Hosts the higher-layer protocols, including PDCP and SDAP. It is a relatively heavy unit that is generally deployed in regional or central data centers.

DU (Distributed Unit): Handles the lower-layer protocols for real-time processing, including RLC and MAC (and some parts of the PHY). DUs should be deployed as close to the RU as possible.

RU (Radio Unit): Conducts low-level RF processing and is physically located at the cell site (macro, micro, or picocells).

This split supports cloud-native architectures and leaves room for extensive Multi-access Edge Computing (MEC).
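The split described above can be pictured as a simple lookup from protocol layer to hosting unit. The following is a minimal sketch, not a normative mapping: the names `Unit`, `LAYER_TO_UNIT`, `unit_for_layer`, and `layers_hosted_by` are hypothetical, and dividing the PHY into "PHY-high" (DU) and "PHY-low" (RU) is an assumption of this sketch, since the text only says the DU handles "some parts of the PHY".

```python
from enum import Enum


class Unit(Enum):
    CU = "Centralized Unit"
    DU = "Distributed Unit"
    RU = "Radio Unit"


# Layer-to-unit mapping following the descriptions in this article.
# ASSUMPTION: the PHY is divided into a "high" part (DU) and a "low" part (RU).
# Entries are listed in top-to-bottom protocol stack order.
LAYER_TO_UNIT = {
    "SDAP": Unit.CU,
    "PDCP": Unit.CU,
    "RLC": Unit.DU,
    "MAC": Unit.DU,
    "PHY-high": Unit.DU,
    "PHY-low": Unit.RU,
    "RF": Unit.RU,
}


def unit_for_layer(layer: str) -> Unit:
    """Return the unit that hosts a layer; raise ValueError if it is unknown."""
    try:
        return LAYER_TO_UNIT[layer]
    except KeyError:
        raise ValueError(f"unknown layer: {layer!r}") from None


def layers_hosted_by(unit: Unit) -> list[str]:
    """List the layers hosted by a unit, in stack order."""
    return [layer for layer, host in LAYER_TO_UNIT.items() if host is unit]


if __name__ == "__main__":
    for unit in Unit:
        print(f"{unit.name}: {', '.join(layers_hosted_by(unit))}")
```

Keeping the mapping in one table makes it easy to see which layers move together when a unit is relocated to a different tier.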

🧠 Slice-Based CU/DU Deployment Scenarios

| Slice | Use Case | Latency Needs | Deployment Tier | Components |
|---|---|---|---|---|
| Slice A | Autonomous vehicles | Ultra-low (<1 ms) | Tier 1 – Edge DC | CU, DU, RU, MEC, UPF |
| Slice B | Voice services | Low latency | Tier 2 – Regional DC | CU, DU, RU |
| Slice C | IoT sensor networks | Relaxed latency | Tier 3 – Central DC | CU, DU, RU |

🔹 Slice A: Ultra-low latency (e.g., autonomous driving)

The CU, DU, and User Plane Function (UPF) are all deployed at the edge. MEC is included to minimize round-trip times and is needed because of the real-time nature of applications like V2X (Vehicle-to-Everything).

🔹 Slice B: Low latency (e.g., voice services)

The CU is deployed in a regional data center while the DU sits closer to the edge. This is recommended for services that are latency-sensitive but not latency-critical, such as VoLTE/VoNR.

🔹 Slice C: Relaxed latency (e.g., smart sensors)

The DU and CU are located in a central data center, which works well where timing is not critical to the task (e.g., reading smart meters or environmental sensors). This scenario uses indoor picocells, but outdoor coverage for industrial IoT spaces will depend on the market's needs.
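The three scenarios above can be captured in a small data model that records, per slice, the tier hosting the DU and the tier hosting the CU. This is only an illustrative sketch: `Tier`, `SlicePlacement`, `PLACEMENTS`, `validate`, and `functions_at` are hypothetical names, Slice B's DU is modelled as sitting in the edge tier (the text says only "closer to the edge"), and the check that a DU never sits deeper in the network than its CU is a sanity rule assumed here.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    EDGE = 1      # Tier 1 - Edge DC
    REGIONAL = 2  # Tier 2 - Regional DC
    CENTRAL = 3   # Tier 3 - Central DC


@dataclass(frozen=True)
class SlicePlacement:
    use_case: str
    du_tier: Tier
    cu_tier: Tier
    edge_extras: tuple[str, ...] = ()  # extra functions co-located at the edge


# Placements as described in the slice scenarios.
# ASSUMPTION: Slice B's DU is placed in the edge tier.
PLACEMENTS = {
    "A": SlicePlacement("autonomous vehicles", Tier.EDGE, Tier.EDGE, ("UPF", "MEC")),
    "B": SlicePlacement("voice services", Tier.EDGE, Tier.REGIONAL),
    "C": SlicePlacement("IoT sensor networks", Tier.CENTRAL, Tier.CENTRAL),
}


def validate(placement: SlicePlacement) -> None:
    """Sanity rule assumed by this sketch: the DU is never deeper than the CU."""
    if placement.du_tier > placement.cu_tier:
        raise ValueError("DU must not be more centralised than its CU")


def functions_at(tier: Tier) -> dict[str, list[str]]:
    """Return, per slice, the functions hosted in the given tier."""
    result: dict[str, list[str]] = {}
    for slice_id, placement in PLACEMENTS.items():
        validate(placement)
        hosted = []
        if placement.du_tier == tier:
            hosted.append("DU")
        if placement.cu_tier == tier:
            hosted.append("CU")
        if tier == Tier.EDGE:
            hosted.extend(placement.edge_extras)
        if hosted:
            result[slice_id] = hosted
    return result


if __name__ == "__main__":
    for tier in Tier:
        print(tier.name, functions_at(tier))
```

Querying by tier rather than by slice shows what each data centre has to host, which is usually the question a capacity planner asks first.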

📦 The Importance of MEC in Low-Latency CU/DU Deployments

Multi-access Edge Computing (MEC) brings the application processing closer to the user by incorporating compute at or near the DU location. MEC supports:

Data offloading from the core

Real-time analytics and response

Reduced congestion and backhaul consumption

MEC is most evident in Slice A, where automated decision-making and ultra-low latency processing are required.
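A rough back-of-the-envelope calculation illustrates why distance matters for a slice like Slice A. Light in optical fibre travels at roughly 200,000 km/s, or about 5 µs per kilometre one way. The sketch below computes propagation-only round-trip time; the function name `fibre_rtt_ms` and the example distances are hypothetical, and processing, queuing, and radio delays are deliberately ignored.

```python
# Approximate one-way propagation delay in optical fibre (about 200,000 km/s).
FIBRE_DELAY_US_PER_KM = 5.0


def fibre_rtt_ms(distance_km: float) -> float:
    """Propagation-only round-trip time in milliseconds over a fibre path."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km * FIBRE_DELAY_US_PER_KM / 1000.0


if __name__ == "__main__":
    # ASSUMPTION: illustrative distances, not taken from any real deployment.
    for label, km in [("edge", 10), ("regional", 100), ("central", 1000)]:
        print(f"{label:>8}: {km:>5} km -> {fibre_rtt_ms(km):.1f} ms round trip")
```

Under these assumptions, a site 100 km away already consumes about 1 ms in propagation alone, before any processing takes place, which is why a sub-millisecond budget pushes compute toward the DU location.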

🔄 Deployment Options: An Enabler of 5G RAN Evolution

Splitting the RAN into CU, DU, and RU and placing these functions strategically across Tier 1 (Edge), Tier 2 (Regional), and Tier 3 (Central) infrastructure offers:

🔁 Scalability: you can dynamically add slices and compute capacity

🏗️ Modularity: you deploy only what is needed, based on service demand

🧩 Customization: you can tailor deployments for private networks or industry-driven use cases

🔚 Conclusion

In today’s 5G RAN deployments, where the CU and DU functions are located is increasingly important for accommodating differentiated service requirements across network slices. Telecom operators can begin to realize the promise of 5G (flexibility, performance, and efficiency) by matching deployment strategy to latency expectations: edge for real-time services, central for non-critical ones. Placing MEC and UPF in the right tier of the network allows optimal use of resources while maintaining user experience.

🧭 Strategic Takeaways for Telecom Practitioners

Whether you're a network or software architect, 5G engineer, or enterprise IT architect, here are key takeaways to remember:

✔️ Deployment Considerations:

Edge (Tier 1) deployment is essential for ultra-reliable low latency communication (URLLC).

Regional (Tier 2) deployment is a good fit for latency-sensitive applications like voice.

Centralized (Tier 3) deployment is typically the cheapest option and is good for non-critical use cases (e.g., IoT data collection).
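The considerations above amount to a simple rule: pick the most centralised (and typically cheapest) tier that still meets the slice's latency budget. The following sketch shows one way to express that rule. The per-tier round-trip figures in `TIER_RTT_MS` are hypothetical placeholders, not measurements, and `cheapest_tier` is an illustrative name.

```python
from typing import Optional

# Tiers ordered from most centralised (typically cheapest) to edge.
# ASSUMPTION: the achievable round-trip times (ms) are made-up placeholders.
TIER_RTT_MS = [
    ("Tier 3 - Central DC", 20.0),
    ("Tier 2 - Regional DC", 5.0),
    ("Tier 1 - Edge DC", 1.0),
]


def cheapest_tier(latency_budget_ms: float) -> Optional[str]:
    """Return the most centralised tier whose RTT fits the budget, else None."""
    for name, rtt_ms in TIER_RTT_MS:
        if rtt_ms <= latency_budget_ms:
            return name
    return None  # no tier can satisfy the budget


if __name__ == "__main__":
    for budget in (50.0, 10.0, 1.0, 0.5):
        print(f"budget {budget} ms -> {cheapest_tier(budget)}")
```

Returning `None` when no tier fits makes the infeasible case explicit, so a caller can reject or renegotiate the SLA instead of silently placing the slice at the edge.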

✔️ Business Implications:

Operators can monetize network slicing by providing differentiated SLAs.

Enterprise businesses can deploy private 5G with flexible CU/DU placement to meet specific use-case demands.

Vendors can design their hardware/software stacks to support modular and disaggregated RAN architectures.

✔️ Technology Trends:

Integration with Open RAN (O-RAN) architectures to allow for vendor-neutral deployments.

Utilization of AI/ML-powered xApps to enable intelligent orchestration at the RAN Intelligent Controller (RIC) layer.

Growth of Edge Computing to serve as an on-ramp for third-party applications, and to improve end-user experience.

🧰 Ready to Take the Next Steps?

Don't get left behind in the fast-changing telecom world; become an expert in flexible 5G RAN architectures! Knowing how to deploy CU/DU functions based on latency, bandwidth, and compute location is a defining skill of the 5G decade and will set you apart.

🧩 Conclusion: The Future of Flexible RAN is Now

As the 5G landscape matures, the ability to position Centralized Units (CUs) and Distributed Units (DUs) optimally is the bedrock for offering differentiated services. Ultra-low latency autonomous vehicles, moderate latency voice services, and relaxed latency enterprise IoT networks each need their RAN functions orchestrated and located according to the performance requirements of the use case.

This architecture allows operators to:

Increase efficiency by only putting compute close to the user when necessary.

Reduce latency bottlenecks through the use of edge computing.

Optimize cost against performance across tiered data centers.

Through service-aware CU/DU deployments, telcos and enterprises will be able to maximize the potential of network slicing, MEC, and disaggregated RAN to enable the next wave of digital innovation.