Understanding Generic AI Native Architecture: The Foundation of Intelligent Telecom Networks

Generic AI Native Architecture: Crafting Smart, Self-Sufficient Telecom Networks

The telecom sector is moving rapidly toward 5G, 6G, and cloud-native networks, and Artificial Intelligence (AI) has become crucial for improving efficiency, automation, and day-to-day operations. To harness AI fully, operators need to adopt an AI native architecture: a multi-layered approach in which AI is not an add-on feature but an essential part of the network from the ground up.

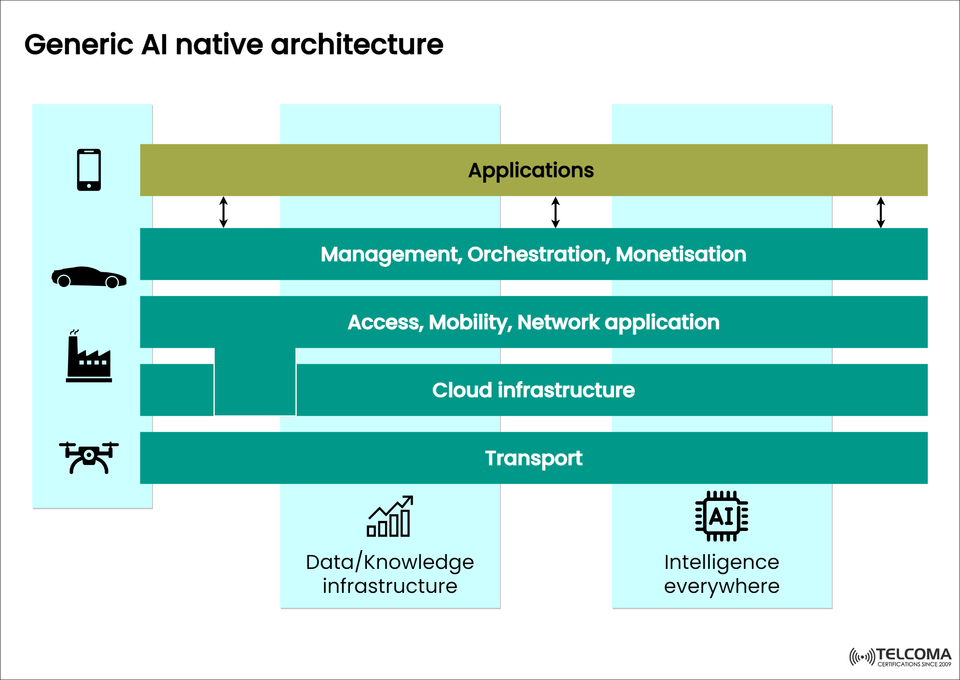

The Generic AI Native Architecture, displayed in the image above, lays out a clear framework to understand how intelligence touches every part of a telecom ecosystem — be it transport, cloud, management, or applications. This architecture enables complete automation, dynamic orchestration, and data-led decision-making, all of which are key for next-gen telecom operations.

What Is AI Native Architecture?

An AI native architecture is designed so that AI is woven directly into every layer of the network and its operations, allowing for continuous learning, adaptation, and optimization.

Unlike older systems that treat AI as a separate component, AI native setups embed intelligence directly into the infrastructure. This means networks and apps can manage themselves, optimize on the fly, and fix problems with little or no human input.

Key Goals of AI Native Architecture:

Self-directed decision-making thanks to built-in intelligence

Scalable and distributed AI across all network domains

Real-time orchestration for cloud and edge environments

Streamlined monetization and innovation in services

In the telecom world, this kind of design makes sure that networks, platforms, and services adapt fluidly to user demand, varying traffic conditions, and operational targets.

The Layered View of Generic AI Native Architecture

The Generic AI Native Architecture is broken down into six connected layers, each with its own role. Together, they create a fully intelligent, data-driven network environment.

Let’s take a closer look at each layer.

a) Applications Layer

At the top is the Applications Layer, home to end-user and enterprise applications. This includes:

Telecom management portals

Customer-facing applications

IoT apps (like connected cars, smart factories, drones)

AI analytics and visualization tools

This layer collaborates with the management and orchestration systems to provide:

Service assurance

Network analytics

Tailored user experiences

AI plays a key role here, making sure these applications are aware of context, adjusting dynamically based on data insights, user behavior, and performance indicators.

b) Management, Orchestration, and Monetization Layer

This layer acts as the control layer for network automation and business operations.

Key Functions:

AI-backed orchestration: Automates service delivery, scaling, and resource management.

Closed-loop management: Identifies issues and corrects them in real time.

Monetization strategies: Uses AI-driven insights to refine pricing, service models, and SLA adherence.

In telecom networks, this layer works alongside Service Orchestrators (SO), Network Function Managers (NFM), and AI Operations (AIOps) tools to achieve zero-touch automation.

With AI integrated here, the system can manage network health while dynamically optimizing costs and revenue.
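To make the closed-loop idea more concrete, here is a minimal sketch of an observe, analyze, act cycle. Everything in it is an assumption made for illustration: the `CellMetrics` structure, the thresholds, and the "capacity units" are hypothetical and do not come from any specific orchestrator. A real system would replace the threshold check with a trained model and the `act` step with calls to an orchestration API.

```python
from dataclasses import dataclass


@dataclass
class CellMetrics:
    """Hypothetical KPI snapshot for one network cell."""
    cell_id: str
    utilization: float   # fraction of allocated capacity in use, 0.0 to 1.0
    capacity_units: int  # currently allocated capacity units


def analyze(metrics: CellMetrics, high: float = 0.8, low: float = 0.3) -> str:
    """Analyze step: classify the cell against illustrative thresholds."""
    if metrics.utilization > high:
        return "scale_out"
    if metrics.utilization < low and metrics.capacity_units > 1:
        return "scale_in"
    return "hold"


def act(metrics: CellMetrics, decision: str) -> CellMetrics:
    """Act step: apply the decision and return the updated state."""
    if decision == "hold":
        return metrics
    delta = 1 if decision == "scale_out" else -1
    new_units = metrics.capacity_units + delta
    # The same absolute load is spread over the new capacity.
    load = metrics.utilization * metrics.capacity_units
    return CellMetrics(metrics.cell_id, load / new_units, new_units)


def closed_loop(metrics: CellMetrics, max_iterations: int = 10) -> CellMetrics:
    """Repeat analyze -> act until the cell is back within bounds."""
    for _ in range(max_iterations):
        decision = analyze(metrics)
        if decision == "hold":
            break
        metrics = act(metrics, decision)
    return metrics
```

The loop stops as soon as the cell is within its target range, which is the "corrects them in real time" behavior in miniature: detect a deviation, apply a correction, and check again.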

c) Access, Mobility, and Network Applications Layer

This layer covers the heart of telecom operations: managing connectivity, mobility, and user access across heterogeneous networks.

Key Capabilities:

Dynamic radio access management (RAN and O-RAN integration)

Mobility management across edge and core networks

Quality of Service (QoS) enforcement using AI predictions

Real-time analytics for controlling congestion and reducing latency

AI here gives networks contextual awareness, allowing them to adjust parameters such as transmission power, routing, and handovers on the fly, based on traffic and user mobility.

This adaptability is especially important for 5G and beyond, where vast numbers of devices (IoT sensors, self-driving cars, and smart city infrastructure, for example) need reliable, low-latency connections.
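As a rough illustration of how a prediction can feed into a mobility decision, the sketch below combines a signal-strength comparison with a predicted load for the neighbor cell. The function name, the 3 dB hysteresis, and the 0.9 load ceiling are all hypothetical values chosen for the example, and the load prediction is simply passed in as a number where a real deployment would call a model.

```python
def should_hand_over(serving_rsrp_dbm: float,
                     neighbor_rsrp_dbm: float,
                     predicted_neighbor_load: float,
                     hysteresis_db: float = 3.0,
                     max_load: float = 0.9) -> bool:
    """Return True if the device should move to the neighbor cell.

    Signal strengths are in dBm (less negative is stronger). The load
    value stands in for the output of an AI model and is expected to
    be between 0.0 and 1.0.
    """
    # Require a clear signal advantage to avoid ping-pong handovers.
    signal_better = neighbor_rsrp_dbm > serving_rsrp_dbm + hysteresis_db
    # Skip neighbors that are predicted to be congested.
    neighbor_has_room = predicted_neighbor_load < max_load
    return signal_better and neighbor_has_room
```

The hysteresis margin keeps a device from bouncing between two cells of similar strength, while the load check shows where an AI prediction can veto a handover that looks good on signal alone.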

d) Cloud Infrastructure Layer

The cloud infrastructure serves as the computational backbone for AI native systems.

Core Functions:

Virtualized computing, storage, and network resources

AI model hosting and training setups

Edge cloud deployment for ultra-low-latency AI processing

AI at this level allows for predictive resource scaling, failure detection, and automated workload shifts. For telecom operators, cloud-native AI means:

Smooth network slicing

Scalable 5G operations

Efficient energy use

This layer is where AI workloads execute, running inference engines and handling data across edge, core, and multi-cloud settings.
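Predictive resource scaling can be sketched in a few lines. The example below uses simple exponential smoothing as a stand-in for whatever forecasting model an operator would actually deploy; the function names, the smoothing factor, and the 20% headroom are assumptions made for illustration only.

```python
import math


def forecast_next(load_history: list[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing: one-step-ahead load forecast."""
    if not load_history:
        raise ValueError("load_history must not be empty")
    level = load_history[0]
    for observed in load_history[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1 - alpha) * level
    return level


def replicas_needed(forecast_load: float, capacity_per_replica: float,
                    headroom: float = 0.2, min_replicas: int = 1) -> int:
    """Replicas required to serve the forecast plus a safety margin."""
    target = forecast_load * (1 + headroom)
    return max(min_replicas, math.ceil(target / capacity_per_replica))
```

For a load history of 100, 200, and 400 requests per second, the forecast is 275, and with replicas that each handle 100 requests per second the sketch asks for 4 replicas. Scaling on the forecast rather than on the current reading is what lets capacity be in place before demand arrives.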

e) Transport Layer

The Transport Layer makes sure data moves efficiently across different network domains — from users and devices to cloud and edge resources.

AI Integration:

Traffic management: AI algorithms dynamically adjust data routes based on network conditions.

Self-healing networks: Automatically detect and work around failures.

Energy-saving routing: Optimizes network use to cut down energy consumption.

In telecom, AI in the transport layer helps deliver high availability, low latency, and cost-efficient connectivity, all critical for real-time services such as remote surgery or autonomous driving.
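The traffic management and self-healing behaviors above can be approximated with a classic shortest-path search. This sketch uses Dijkstra's algorithm, not an AI model, and the cost formula (latency inflated by link utilization) is an assumption chosen for the example. The `links` and `failed` structures are hypothetical as well.

```python
import heapq


def best_path(links, source, target, failed=frozenset()):
    """Dijkstra's algorithm over links weighted by latency and utilization.

    links:  dict mapping (node_a, node_b) -> (latency_ms, utilization),
            treated as bidirectional.
    failed: set of (node_a, node_b) links to route around.
    Returns (cost, path), or (inf, []) if the target is unreachable.
    """
    graph = {}
    for (a, b), (latency_ms, utilization) in links.items():
        if (a, b) in failed or (b, a) in failed:
            continue  # self-healing: failed links are left out of the graph
        # Illustrative cost: latency grows as the link fills up.
        cost = latency_ms * (1 + utilization)
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))

    queue = [(0.0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []
```

Calling `best_path` again with an updated `failed` set or fresh utilization figures yields a new route, which is the basic mechanism behind both dynamic traffic steering and working around failures. An AI-driven system would typically supply predicted utilization instead of the measured value.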

f) Data/Knowledge Infrastructure and Intelligence Everywhere

At the base of this architecture are two vital pillars:

- Data/Knowledge Infrastructure

This is the nervous system of AI native design, enabling data collection, storage, processing, and sharing.

Key elements include:

Unified data lakes and knowledge graphs

Metadata management and ontology-based models

Data pipelines for both training and inference

Federated learning frameworks for distributed AI

This layer is meant to break down data silos, letting insights flow freely between different network domains.
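Federated learning is the least self-explanatory item in that list, so here is a minimal sketch of its aggregation step in the style of federated averaging (FedAvg). Each site trains locally and shares only model weights, which a coordinator averages in proportion to the amount of data each site holds. The function name and the flat list-of-floats model representation are simplifications assumed for this example.

```python
def federated_average(client_updates):
    """Weighted average of client model weights (FedAvg-style).

    client_updates: list of (weights, num_samples) pairs, where weights
    is a list of floats with the same length for every client.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(num_samples for _, num_samples in client_updates)
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, num_samples in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must share one model shape")
        for i, w in enumerate(weights):
            # Clients with more local data contribute more to the result.
            averaged[i] += w * num_samples / total_samples
    return averaged
```

With one edge site contributing weights `[1.0, 2.0]` from 100 samples and another contributing `[3.0, 4.0]` from 300 samples, the combined model is `[2.5, 3.5]`. The raw data never leaves either site, which is why this approach suits distributed telecom domains.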

- Intelligence Everywhere

This notion means embedding AI-driven intelligence throughout every layer — from devices all the way to the cloud.

It encompasses:

AI at the edge for quick inference

AI in the core for large-scale analysis

AI in management systems for orchestration

AI in applications for tailored user experiences

In essence, “Intelligence Everywhere” means every piece can sense, decide, and act on its own, creating a cohesive, self-evolving system.

End-to-End AI Integration: How the Layers Connect

Each layer of the AI native architecture communicates back and forth with the others. For example:

Applications provide performance feedback to the management layer, which helps with AI-based optimization.

The cloud layer offers computational power for training AI models used in orchestration and access layers.

Data infrastructure supports all layers, ensuring ongoing learning through shared insights.

This end-to-end AI integration forms a closed feedback loop, allowing the entire system to continuously learn, adapt, and optimize.

Benefits of the AI Native Architecture

Embracing a Generic AI Native Architecture brings several clear advantages for both telecom and business settings:

Operational Benefits

Zero-touch automation with self-optimizing networks

Real-time orchestration and service assurance

Predictive maintenance and proactive issue management

Business Benefits

Faster service monetization using AI insights

Enhanced customer experiences through contextual analysis

Cost efficiency from automated resource management

Technical Benefits

Scalable and modular designs for cloud-native setups

AI integrated at every level for adaptable intelligence

Data-informed decisions across dispersed domains

Example: AI Native Architecture in a Telecom Use Case

Imagine a 5G-powered smart city network:

Transport layer: AI dynamically directs data traffic from IoT sensors.

Cloud infrastructure: Edge AI quickly processes video feeds for minimal delay.

Access and mobility: AI ensures smooth transitions between cells for autonomous vehicles.

Management layer: AI orchestrates network slices for public safety and traffic management.

Applications layer: City dashboards display real-time insights.

This interconnected system runs on its own, constantly learning and optimizing — a true demonstration of AI native design.

Future Outlook: Towards AI-Empowered 6G Networks

As we head toward 6G, AI is set to evolve from an optimization tool into the core operational fabric of telecom systems. Future architectures will feature:

Shared AI across multiple operators

Self-evolving AI models that adjust based on real-world feedback

Complete automation from edge devices to cloud management

The Generic AI Native Architecture thus serves as the blueprint for future intelligent, autonomous networks.

Conclusion: The Foundation for Autonomous Intelligence

The Generic AI Native Architecture lays out a solid vision for embedding AI at every level of telecom — creating networks that are adaptive, intelligent, and self-sustaining.

By putting intelligence everywhere, from data infrastructure to applications, businesses can gain agility, resilience, and room for innovation.

For telecom operators, adopting this architecture is more than just a tech upgrade; it’s a step towards becoming truly AI-native enterprises that can support the smart, connected world of the future.